Andrea Goethals is Digital Preservation Manager at the National Library of New Zealand

What We Learned by Playing Games at iPRES 2018

Over the last few years, a group of us has been working on a list of criteria for storage that supports digital preservation (see version 3 at https://osf.io/sjc6u/). At the recent “Using the Digital Preservation Storage Criteria” workshop at iPRES 2018, we put the criteria to the test.

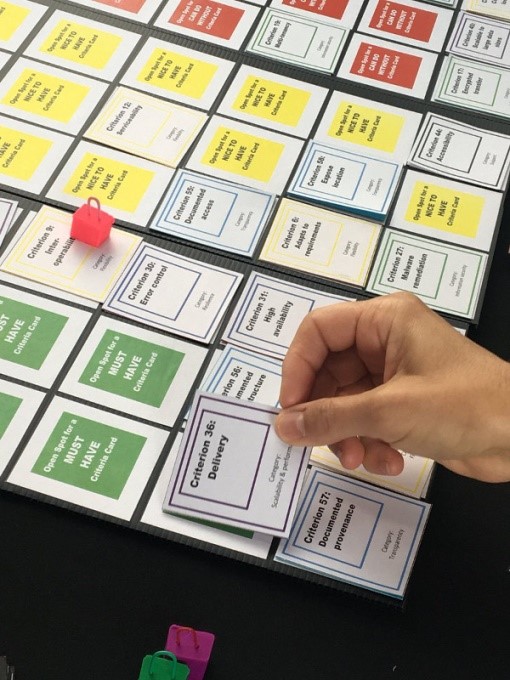

Figure 1: Image credit: Ashley Blewer (@ablwr)

We divided the participants into groups of five or six to play the Criteria Game. The game asks players to sort the 61 criteria into three buckets (MUST HAVE, NICE TO HAVE, or CAN DO WITHOUT) and, most importantly, to give a reason for each placement. There are only 21 spaces in each bucket, so tough decisions must be made. Criteria can be reshuffled (reprioritized) during the game, but each player can lock down a single criterion they feel strongly about, which prevents other players from moving it. A twist is that each player is randomly assigned a role, e.g., “you are from an archive with confidential and highly sensitive material”.
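The core rules (three buckets capped at 21 spaces each, a reason required for every placement, and one lock per player) can be sketched as a small data structure. This is a minimal, hypothetical Python model for illustration only; the names `CriteriaGame`, `place`, and `lock` are assumptions and are not part of the actual game materials.

```python
from dataclasses import dataclass, field

BUCKETS = ("MUST HAVE", "NICE TO HAVE", "CAN DO WITHOUT")
BUCKET_CAPACITY = 21  # spaces per bucket, as in the game rules


@dataclass
class CriteriaGame:
    """Hypothetical model of one group's board; not the official game."""

    placement: dict[str, str] = field(default_factory=dict)  # criterion -> bucket
    reasons: dict[str, str] = field(default_factory=dict)    # criterion -> reason
    locks: dict[str, str] = field(default_factory=dict)      # criterion -> player

    def count(self, bucket: str) -> int:
        """Number of criteria currently placed in a bucket."""
        return sum(1 for b in self.placement.values() if b == bucket)

    def place(self, criterion: str, bucket: str, reason: str) -> None:
        """Place or reshuffle a criterion; every placement needs a reason."""
        if bucket not in BUCKETS:
            raise ValueError(f"unknown bucket: {bucket}")
        if not reason.strip():
            raise ValueError("a reason is required for every placement")
        if criterion in self.locks:
            raise PermissionError(f"{criterion!r} is locked by {self.locks[criterion]}")
        # Re-placing a criterion in the bucket it already occupies uses no new space.
        if self.placement.get(criterion) != bucket and self.count(bucket) >= BUCKET_CAPACITY:
            raise ValueError(f"{bucket} is full ({BUCKET_CAPACITY} spaces)")
        self.placement[criterion] = bucket
        self.reasons[criterion] = reason

    def lock(self, player: str, criterion: str) -> None:
        """Each player may lock a single placed criterion against reshuffling."""
        if player in self.locks.values():
            raise PermissionError(f"{player} has already used their lock")
        if criterion not in self.placement:
            raise ValueError(f"{criterion!r} must be placed before it can be locked")
        if criterion in self.locks:
            raise PermissionError(f"{criterion!r} is already locked")
        self.locks[criterion] = player
```

For example, after `game.lock("player 1", "Recovery and repair")`, any attempt to move that criterion raises `PermissionError`. With 61 criteria and only 63 spaces in total (3 × 21), the caps leave almost no slack, which is what forces the tough decisions.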

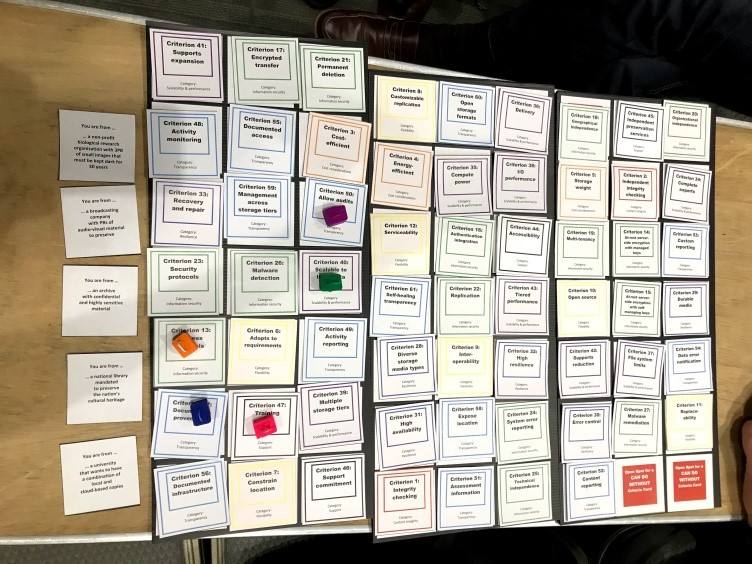

Figure 2: Results of one of the finished games. The first three columns are the MUST HAVEs, the next three columns are the NICE TO HAVEs, and the last three are the CAN DO WITHOUTs.

I analysed the results of 7 games played during iPRES to see whether the groups agreed on the relative importance of the criteria and, digging deeper, which of the 61 criteria tended to be rated as more or less important.

The results are available at http://bit.ly/2PzV72u.

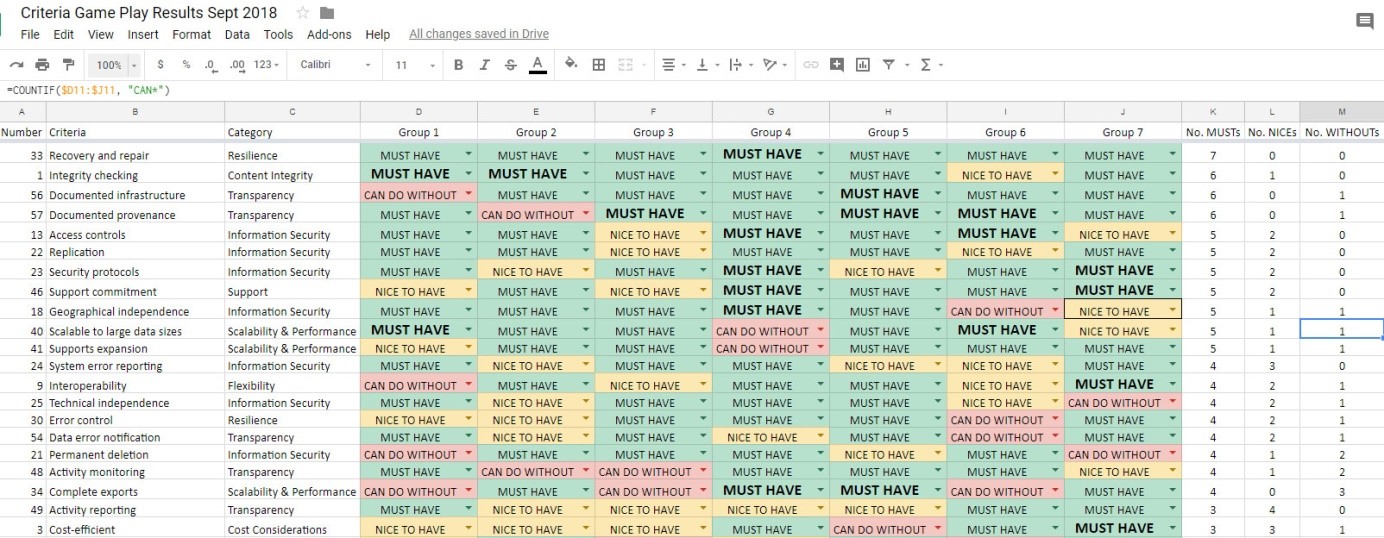

Figure 3: Partial screenshot of the game analysis. See the full spreadsheet at http://bit.ly/2PzV72u

Some patterns become apparent as you look at the results across the 7 groups.

Observation 1: The first 11 criteria in the sorted spreadsheet tended to be categorized as MUST HAVEs (see Table 1). Also note that many of the ‘locked’ criteria (those that people felt strongly about) are in this section.

| Criterion | MUST HAVE | NICE TO HAVE | CAN DO WITHOUT |
|---|---|---|---|
| Recovery and repair | 7 | 0 | 0 |
| Integrity checking | 6 | 1 | 0 |
| Documented infrastructure | 6 | 0 | 1 |
| Documented provenance | 6 | 0 | 1 |
| Access controls | 5 | 2 | 0 |
| Replication | 5 | 2 | 0 |
| Security protocols | 5 | 2 | 0 |
| Support commitment | 5 | 2 | 0 |
| Geographical independence | 5 | 1 | 1 |
| Scalable to large data sizes | 5 | 1 | 1 |
| Supports expansion | 5 | 1 | 1 |

Table 1: The criteria rated most important by the 7 groups. Each number is how many of the 7 groups placed the criterion in that bucket.

Observation 2: The middle section of the spreadsheet, roughly two-thirds of it, contains the criteria that were ranked inconsistently across the games, i.e., rated important by some groups and unimportant by others.

Observation 3: The criteria in the last 11 rows of the sorted spreadsheet were consistently ranked as less important than the other criteria across the 7 groups. These criteria are listed in Table 2.

| Criterion | MUST HAVE | NICE TO HAVE | CAN DO WITHOUT |
|---|---|---|---|
| High availability | 0 | 5 | 2 |
| Assessment information | 0 | 5 | 2 |
| Independent preservation services | 0 | 4 | 3 |
| Energy-efficient | 0 | 3 | 4 |
| At rest server-side encryption with managed keys | 0 | 3 | 4 |
| Customizable replication | 0 | 2 | 5 |
| Open source | 0 | 2 | 5 |
| Custom reporting | 0 | 2 | 5 |
| Supports reduction | 0 | 1 | 6 |
| Storage weight | 0 | 0 | 7 |
| At rest server-side encryption with self-managing keys | 0 | 0 | 7 |

Table 2: The criteria rated least important by the 7 groups. Each number is how many of the 7 groups placed the criterion in that bucket.
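The row order in Tables 1 and 2 is consistent with a simple procedure: count how many groups placed each criterion in each bucket, then sort by MUST HAVE count (most first), breaking ties by CAN DO WITHOUT count (fewest first). The Python sketch below assumes that sort key; it is an inference from the two tables, not necessarily the exact method used in the analysis spreadsheet. `tally`, `rank`, and `SAMPLE_ROWS` are hypothetical names, and the sample counts are copied from the tables above.

```python
from collections import Counter
from typing import NamedTuple


class Tally(NamedTuple):
    criterion: str
    must: int     # groups that chose MUST HAVE
    nice: int     # groups that chose NICE TO HAVE
    without: int  # groups that chose CAN DO WITHOUT


def tally(games: list[dict[str, str]]) -> list[Tally]:
    """Count, per criterion, how many games put it in each bucket.

    Each game is a mapping of criterion -> bucket name.
    """
    counts: dict[str, Counter] = {}
    for game in games:
        for criterion, bucket in game.items():
            counts.setdefault(criterion, Counter())[bucket] += 1
    # Counter returns 0 for buckets a criterion never landed in.
    return [
        Tally(c, n["MUST HAVE"], n["NICE TO HAVE"], n["CAN DO WITHOUT"])
        for c, n in counts.items()
    ]


def rank(rows: list[Tally]) -> list[Tally]:
    """Assumed ordering: most MUST HAVEs first, then fewest CAN DO WITHOUTs."""
    return sorted(rows, key=lambda t: (-t.must, t.without))


# A few rows from Tables 1 and 2, deliberately out of order.
SAMPLE_ROWS = [
    Tally("Storage weight", 0, 0, 7),
    Tally("Access controls", 5, 2, 0),
    Tally("Recovery and repair", 7, 0, 0),
    Tally("Supports expansion", 5, 1, 1),
    Tally("Energy-efficient", 0, 3, 4),
]

if __name__ == "__main__":
    for row in rank(SAMPLE_ROWS):
        print(row.criterion)
```

Criteria that tie on both counts (for example, Open source and Custom reporting, both 0/2/5) keep their input order, because Python's `sorted` is stable.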

Discussion: The first thing to note is that these are the results of only 7 groups playing the game, and they shouldn’t be taken to represent the views of the overall digital preservation community. But the results do raise questions worth exploring.

- One player surmised that some of the CAN DO WITHOUT rankings were caused by players not understanding the criteria. It’s worth exploring whether there should be more educational material around some of the criteria ranked as less important.

- Each player took on a role for the game. Would we see similar results if people played from the perspective of their actual institutional role? (Note that workshop participants said they liked being randomly assigned a role.)

- Especially for the criteria the players disagreed on the most, what do we actually know about their importance? Some concepts, such as the importance of diverse storage media types, have been presented in the digital preservation literature as good practice, but what is the evidence for this?

- Some criteria were consistently rated important across the games, despite the different player roles. Does this suggest that some criteria should be considered regardless of institutional context? If so, the criteria could be reframed as a core subset that is always important, plus additional sets to consider depending on context.

We intend to discuss these game results to determine how we can use them to refine the Criteria.

If you are still reading – consider getting involved by joining the dpstorage group (https://groups.google.com/forum/#!forum/dpstorage). Also be on the lookout for a downloadable version of the Criteria game in the next month or so!